HSE 542 Project

Mobile App for Ergonomic Joint Angle Analysis of Real-Time and Recorded Visual Data.

Hari Iyer and Harika Kolli (Team 7)

iOS Application: ART Ergonomics

- Live Analysis
  - Get joint angles in real time.
- Batch/Historical Analysis
  - Get ergonomic analysis for batch or recorded videos.
- Camera
  - Interface to the camera to record content.
- About
  - Help and information for better usability.

Live Posture

- Can be used on real-life scenes or on visual media.
- Joint data is recorded.

Sample Output Data

__C.VNRecognizedPointKey(_rawValue: right_shoulder_1_joint): [0.284539; 0.510343],
__C.VNRecognizedPointKey(_rawValue: right_ear_joint): [0.211754; 0.520053],
__C.VNRecognizedPointKey(_rawValue: right_upLeg_joint): [0.454197; 0.541903],
__C.VNRecognizedPointKey(_rawValue: root): [0.449222; 0.576869],
__C.VNRecognizedPointKey(_rawValue: left_eye_joint): [0.198062; 0.580942],
__C.VNRecognizedPointKey(_rawValue: right_foot_joint): [0.795184; 0.333362],
__C.VNRecognizedPointKey(_rawValue: left_forearm_joint): [0.254368; 0.854999],
__C.VNRecognizedPointKey(_rawValue: left_shoulder_1_joint): [0.269123; 0.695943],
__C.VNRecognizedPointKey(_rawValue: right_leg_joint): [0.665921; 0.694138],
__C.VNRecognizedPointKey(_rawValue: right_hand_joint): [0.225972; 0.450450],
__C.VNRecognizedPointKey(_rawValue: left_upLeg_joint): [0.444248; 0.611836],
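As a rough illustration of how a joint angle could be derived from recorded points like the sample output, the sketch below computes the angle at a middle joint from three 2D points. It is not the app's actual code. It assumes that `right_upLeg_joint`, `right_leg_joint`, and `right_foot_joint` correspond to the right hip, knee, and ankle, and that the two coordinates can be treated as plain x and y values; if they are normalized to a non-square frame, they would need rescaling to pixel units first. The helper name `joint_angle` is hypothetical.

```python
import math

def joint_angle(a, b, c):
    """Return the angle at point b, in degrees, between segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("coincident points: angle is undefined")
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))

# Coordinates copied from the sample output data.
# Assumption: upLeg = hip, leg = knee, foot = ankle.
right_upleg = (0.454197, 0.541903)
right_leg = (0.665921, 0.694138)
right_foot = (0.795184, 0.333362)

knee_angle = joint_angle(right_upleg, right_leg, right_foot)
print(f"right knee angle: {knee_angle:.1f} degrees")
```

The same function applies to any joint triplet (for example shoulder, elbow, wrist), so one helper can cover every angle an assessment needs.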

Processing Recorded Videos

- UCF 101 Dataset
- PoseNet and TensorFlow models
- Joint angles and positions recorded

Human System (Usability): In-built Recording and About (Help)

Data Collection

- Five participants (3 male, 2 female), aged 19 to 24 years (M = 21.8, SD = 2.28).
- Culinary tasks performed.

Bounding Box and Joint Angle Analysis

12 Culinary Tasks classified by Model

Data Collection 3-camera Setup (HiMER Lab)

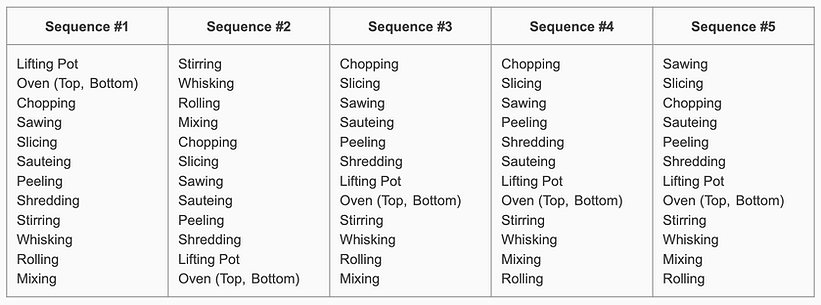

Variation of Task Sequences

Like the original sequence, all five sequences preserved the optimal use of ingredients, with the output of one task serving as the input to a later task.

TensorFlow (PoseNet): Fully Convolutional Neural Network (FCN) Model

- PoseNet
  - TensorFlow model that uses heatmap regression.

- Heatmap generation
  - Probability heatmap that matches each pixel to a joint.
  - Sigmoid activation function.
    - $H(x, y, j) = \operatorname{sigmoid}(g_j(x, y))$, where $g_j(x, y)$ is the output of the last convolutional layer for joint $j$ at pixel location $(x, y)$.

- Offset vectors
  - $O(x, y, j) = (dx, dy)$, where $dx$ and $dy$ are the offsets from heatmap location $(x, y)$ to the actual location of joint $j$.

- Pose estimation
  - For each joint, take the location of the maximum heatmap value and add the corresponding offset vector.
  - $(x_j, y_j) = (\hat{x}, \hat{y}) + O(\hat{x}, \hat{y}, j)$, where $(\hat{x}, \hat{y}) = \arg\max_{(x, y)} H(x, y, j)$ is the location of the maximum value in the heatmap for joint $j$.

- Loss function
  - $L = \lambda L_{\text{pose}} + \mu L_{\text{heatmap}}$, with $\lambda + \mu = 1$.
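The heatmap, offset, decoding, and loss steps on this slide can be sketched for a single joint in plain Python. This is a minimal illustration under stated assumptions, not the PoseNet implementation: the grid is a toy 3x3 example with made-up values, the function names `decode_joint` and `combined_loss` are hypothetical, and the result stays in heatmap-grid units because the slide's formula includes no scaling back to image pixels.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def decode_joint(logits, offsets):
    """Decode one joint.

    logits[y][x]  holds g_j(x, y), the raw network output for the joint.
    offsets[y][x] holds O(x, y, j) = (dx, dy).
    Returns ((x_j, y_j), confidence).
    """
    best_score, best_xy = -1.0, (0, 0)
    for y, row in enumerate(logits):
        for x, g in enumerate(row):
            score = sigmoid(g)  # H(x, y, j)
            if score > best_score:
                best_score, best_xy = score, (x, y)
    bx, by = best_xy
    dx, dy = offsets[by][bx]  # offset read at the argmax location
    return (bx + dx, by + dy), best_score

def combined_loss(l_pose, l_heatmap, lam=0.5):
    """L = lam * L_pose + mu * L_heatmap, with mu = 1 - lam."""
    mu = 1.0 - lam
    return lam * l_pose + mu * l_heatmap

# Toy 3x3 example (hypothetical values, one joint).
logits = [[-2.0, -1.0, -3.0],
          [-1.5,  2.0,  0.5],
          [-2.5,  0.0, -1.0]]
offsets = [[(0.0, 0.0)] * 3 for _ in range(3)]
offsets[1][1] = (0.25, -0.4)

(x_j, y_j), confidence = decode_joint(logits, offsets)
print(f"joint at ({x_j:.2f}, {y_j:.2f}), confidence {confidence:.3f}")
print("loss:", combined_loss(l_pose=2.0, l_heatmap=4.0, lam=0.25))
```

Because the sigmoid is monotonic, the argmax of the raw logits and the argmax of the heatmap coincide; the sigmoid matters for reporting a confidence between 0 and 1.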

Sequential Convolutional Neural Network (S-CNN)

- Ambiguous (similar) tasks
  - Chopping
  - Slicing
  - Sawing
- Epoch 30/30: loss: 0.0258 - accuracy: 0.9931 - val_loss: 0.0026 - val_accuracy: 0.9996
- The model's overall classification accuracy on unseen data converged to between 97% and 99% (see Figure 4).

S-CNN (Contd.)

- Convolution
  - Frames are convolved with a set of learnable filters.
  - Generates feature maps from the pre-processed frames.
- Activation
  - Introduces non-linearity into the CNN.
  - Rectified Linear Unit (ReLU) function.
- Max pooling
  - Downscales the feature map dimensions.
  - Keeps the maximum value within each small window of the feature map.
- Fully connected layers
  - Flattened feature set.
  - Learning based on the extracted features.
- Softmax
  - Probability distribution as output.
  - Highest probability reported as the prediction.
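The five stages listed on this slide can be traced end to end on a tiny input. The sketch below is a plain-Python illustration only, not the S-CNN used in the project: the 5x5 "frame", the 2x2 filter, and the dense-layer weights are hypothetical values chosen so the arithmetic is easy to follow, and the three class labels are borrowed from the ambiguous tasks named earlier.

```python
import math

def conv2d(image, kernel):
    """Valid (no padding) 2D convolution of one channel with one filter."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + u][j + v] * kernel[u][v]
                 for u in range(kh) for v in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def relu(fmap):
    return [[max(0.0, v) for v in row] for row in fmap]

def max_pool(fmap, size=2):
    """Keep the maximum of each non-overlapping size x size window."""
    return [[max(fmap[i + u][j + v] for u in range(size) for v in range(size))
             for j in range(0, len(fmap[0]) - size + 1, size)]
            for i in range(0, len(fmap) - size + 1, size)]

def dense(features, weights, biases):
    return [sum(f * w for f, w in zip(features, ws)) + b
            for ws, b in zip(weights, biases)]

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical inputs and parameters.
frame = [[0, 1, 2, 1, 0],
         [1, 3, 5, 3, 1],
         [2, 5, 9, 5, 2],
         [1, 3, 5, 3, 1],
         [0, 1, 2, 1, 0]]
kernel = [[1, 0],
          [0, -1]]
labels = ["chopping", "slicing", "sawing"]
weights = [[0.1, 0.2, 0.0, 0.3],
           [0.0, 0.1, 0.1, 0.1],
           [0.2, 0.0, 0.1, -0.1]]
biases = [0.0, 0.0, 0.0]

pooled = max_pool(relu(conv2d(frame, kernel)))        # 5x5 -> 4x4 -> 2x2
features = [v for row in pooled for v in row]          # flatten
probs = softmax(dense(features, weights, biases))      # class probabilities
prediction = labels[probs.index(max(probs))]
print(pooled, [round(p, 3) for p in probs], prediction)
```

In a trained network the filter and dense weights are learned rather than hand-set, and many filters run in parallel; the data flow through the stages is the same.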

ART-C Statistical Analysis (t-tests)

- Manual and automated ART measurement.
- Back Posture (C2) and Hand/Finger grip (C5) had poor camera visibility.
- Data from all 12 culinary tasks for 5 participants.
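One way a manual-versus-automated comparison could be tested is a paired t-test on per-task scores. The slides say only "t-tests", so the paired design is an assumption here, and the scores below are hypothetical placeholders, not project data. The function name `paired_t` is also hypothetical.

```python
import math
from statistics import mean, stdev

def paired_t(a, b):
    """Return (t statistic, degrees of freedom) for paired samples a and b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [x - y for x, y in zip(a, b)]
    sd = stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("all differences are identical: t is undefined")
    n = len(diffs)
    return mean(diffs) / (sd / math.sqrt(n)), n - 1

# Hypothetical placeholder scores for five tasks (NOT measured values).
manual = [4.0, 6.0, 3.0, 5.0, 7.0]
automated = [5.0, 6.0, 2.0, 7.0, 8.0]

t_stat, df = paired_t(manual, automated)
print(f"t = {t_stat:.3f}, df = {df}")
```

The resulting statistic would be compared against the critical value for the chosen significance level and degrees of freedom to decide whether the two measurement methods differ.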

Outcomes

- New learning experience
- Mobile application
- Video data collected
- Output of the CV and learning algorithms
- Statistical analysis
- Graphs and charts for trend analysis
- Project hosted on private GitHub
- Journal publication (in progress)

GitHub Repository

References

- Health and Safety Executive. (2010). Assessment of repetitive tasks of the upper limbs (the ART tool). HSE Books. Retrieved from https://books.google.com/books?id=bMiduAAACAAJ
- Li, Z., Zhang, R., Lee, C.-H., & Lee, Y.-C. (2020). An evaluation of posture recognition based on intelligent rapid entire body assessment system for determining musculoskeletal disorders. Sensors, 20, 4414. https://doi.org/10.3390/s20164414
- Iyer, H., Reynolds, J., Nam, C. S., & Jeong, H. (submitted). Ergonomic assessment of repetitive tasks for culinary work using computer vision. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 67(1), pp. xxxx-xxxx. SAGE Publications.
- Apple (iOS): https://developer.apple.com/
- TensorFlow: https://www.tensorflow.org/